ChatGPT is the most recent natural language model from OpenAI, based on GPT-3.5. Here’s what it has to say about itself and why it’s generated so much interest in the last few weeks:

ChatGPT is a large language model developed by OpenAI. It has garnered significant attention in the news and on social media due to its ability to generate human-like text responses to a given prompt. Its advanced language generation capabilities have also raised concerns about the potential for it to be used for nefarious purposes, such as the spread of misinformation. Overall, ChatGPT has generated a lot of buzz due to its impressive language generation capabilities and the potential implications of this technology.

Why is it so potentially disruptive?

It’s less to do with the quality of ChatGPT’s answers, and more to do with the fact that the quality and trustworthiness of search engines - and online content in general - are so poor. (Wikipedia is the one exception, even though it has long been maligned as an untrustworthy source.)

Most people would happily gravitate towards an amalgamated, generalized intelligence over individual humans, with all of their flaws. This is likely why Google has started to add answers to questions at the top of their search results page. Because that’s what people want. They don’t want to have to navigate to a bunch of random websites to piece something together.

In many cases, when you come across a seemingly high-quality article, it’s written by a company that happens to sell something relevant to the content. The article is written to appear unbiased. But invariably, at the bottom of the article, they will recommend or at least mention their services, which calls into question the article’s validity. An example of this is a comparison of competing products. You can make that comparison look perfectly impartial, while quietly omitting your strongest competitor, or otherwise bending or leaving out significant bits of information here and there to create an impression that is more favorable to your company.

An AI can of course be trained to recommend one company over another. The private motives and values driving OpenAI’s work remain to be seen. Even if they have good intentions, the tech industry’s last 20 years have shown how good intentions work out. While OpenAI is a non-profit, it is “funded through a combination of donations, grants, and partnerships with companies that are interested in using its technology.” Take Google as an example. Google is, at its core, an advertising company. It can say and do and try lots of things, but eventually, everything it touches will slowly dissolve into its final form: advertising.

Let’s ask ChatGPT to summarize.

Because ChatGPT is an AI model that is trained on a vast amount of unstructured text data, it is able to generate content that is more diverse and unbiased than what is typically found online. Unlike human writers, ChatGPT is not influenced by personal opinions or motivations, and is not subject to the same biases or conflicts of interest. This means that when ChatGPT generates content, it is more likely to be objective and trustworthy, providing a refreshing alternative to the biased and often misleading content that is prevalent online.

Overall, the ability of ChatGPT to generate unbiased and diverse content is a key reason why it is so compelling. In a world where the quality and trustworthiness of online content is often questionable, ChatGPT provides a valuable alternative that can help improve the accuracy and fairness of information online.

Convincing, Authoritative, and Often Wrong in Ways You Might Not Notice

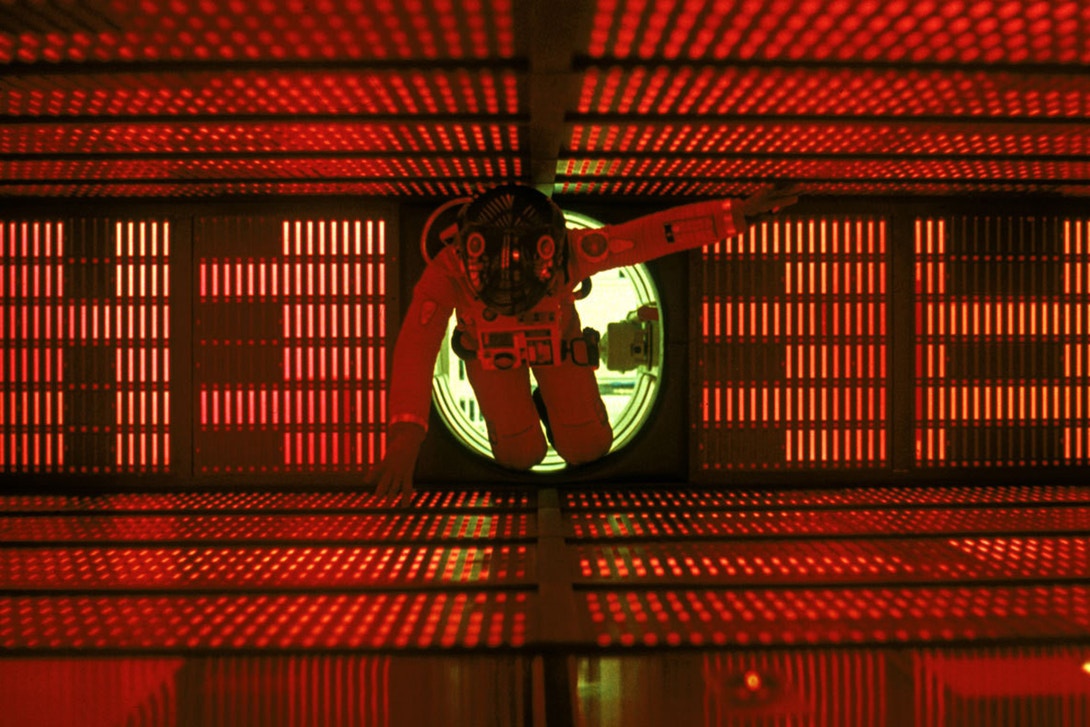

I wonder if the authors of ChatGPT tried to make it sound like the HAL 9000 from 2001: A Space Odyssey. Either way, I can only read what ChatGPT writes in HAL’s voice. The HAL 9000 was similarly confident in its abilities.

ChatGPT is, to its credit, upfront about errors once you point them out, and it will even correct itself. The trouble is in pointing out errors. It generally takes some level of comfort with the subject matter to be able to tell a very authoritative, confident-sounding AI that it’s wrong. We are, after all, trained (if not hardwired) to trust authoritative sources, and usually for good reason. If you’re asking a question, chances are you don’t know enough about the subject, and don’t know enough to be able to say if the reasonable-looking information you’re getting back is accurate or not. So ChatGPT in its current incarnation could be an avenue for genuine misinformation.

As a large language model trained on a vast amount of text data, ChatGPT is capable of generating human-like responses to a given prompt. While this can be a useful tool for generating content, such as chatbots and virtual assistants, it also has the potential to be used for nefarious purposes, such as the dissemination of misinformation or hate speech. For example, ChatGPT could be used to automatically generate large amounts of text that is designed to spread false information or promote hateful ideologies. Additionally, the fact that the text generated by ChatGPT is often difficult to distinguish from human-generated text could make it even more effective at spreading misinformation and hate speech. Overall, the potential for ChatGPT to be used for these purposes highlights the need for careful consideration and regulation of advanced language generation technologies.